Robotics, Machine Learning

Hello! I'm Ray, a PhD student at the Robotics Institute, Carnegie Mellon University, where I'm advised by Prof. Michael Kaess. My research focuses on the intersection of robotics, SLAM, and machine learning, with particular emphasis on state estimation, sensor fusion, and autonomous navigation in challenging environments. Currently, I'm developing millimeter-wave radar-based odometry and mapping systems to enable robust perception in adverse environmental conditions.

Before coming to CMU, I graduated from National Chiao Tung University (NCTU), where I received my B.S. and a fifth-year M.S. degree. I was advised by Prof. Hsueh-Cheng Wang and worked on the DARPA SubT Challenge during my time at NCTU. I was also fortunate to visit Prof. Jenq-Neng Hwang's research group at the University of Washington, where I worked on multi-object tracking for autonomous driving using millimeter-wave radar.

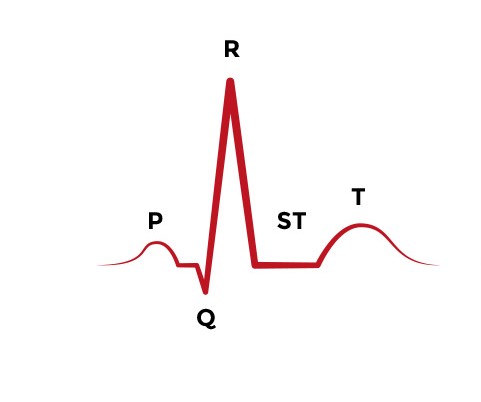

Multi-Radar Inertial Odometry for 3D State Estimation using mmWave Imaging Radar

IEEE ICRA 2024

Jui-Te Huang, Ruoyang Xu, Akshay Hinduja, and Michael Kaess

In this paper, we address Doppler velocity measurement uncertainties. We present a method to optimize body frame velocity while managing Doppler velocity uncertainty. Based on our observations, we propose a dual imaging radar configuration to mitigate the challenge of discrepancy in radar data. To attain high-precision 3D state estimation, we introduce a strategy that seamlessly integrates radar data with a consumer-grade IMU sensor using fixed-lag smoothing optimization. Finally, we evaluate our approach using real-world 3D motion data.
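As a rough illustration of how a body-frame velocity can be recovered from Doppler measurements, here is a minimal sketch of a weighted least-squares fit for a single radar observing static points. It is not the method from the paper. The function names, the sign convention (a static point along unit direction `d` returns Doppler velocity `-d · v`), and the per-point standard deviations `sigmas` are all assumptions made for this example.

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    n = 3
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate geometry: directions do not span 3D")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x


def estimate_ego_velocity(directions, dopplers, sigmas):
    """Weighted least-squares sensor velocity from Doppler returns.

    Assumed model (hypothetical convention): doppler_i = -d_i . v for a
    static point along unit direction d_i. Each point is weighted by
    1 / sigma_i^2, so noisier returns contribute less to the estimate.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for d, vr, sigma in zip(directions, dopplers, sigmas):
        w = 1.0 / (sigma * sigma)
        for i in range(3):
            b[i] += -w * d[i] * vr
            for j in range(3):
                A[i][j] += w * d[i] * d[j]
    # Normal equations: (sum w d d^T) v = -(sum w d doppler)
    return solve3(A, b)
```

The fit needs returns whose directions span all three axes. Otherwise the normal equations are singular and the sketch raises an error.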

Vision meets mmWave Radar: 3D Object Perception Benchmark for Autonomous Driving

IEEE Intelligent Vehicles Symposium 2024

Yizhou Wang, Jen-Hao Cheng, Jui-Te Huang, Sheng-Yao Kuan, Qiqian Fu, Chiming Ni, Shengyu Hao, Gaoang Wang, Guanbin Xing, Hui Liu, Jenq-Neng Hwang

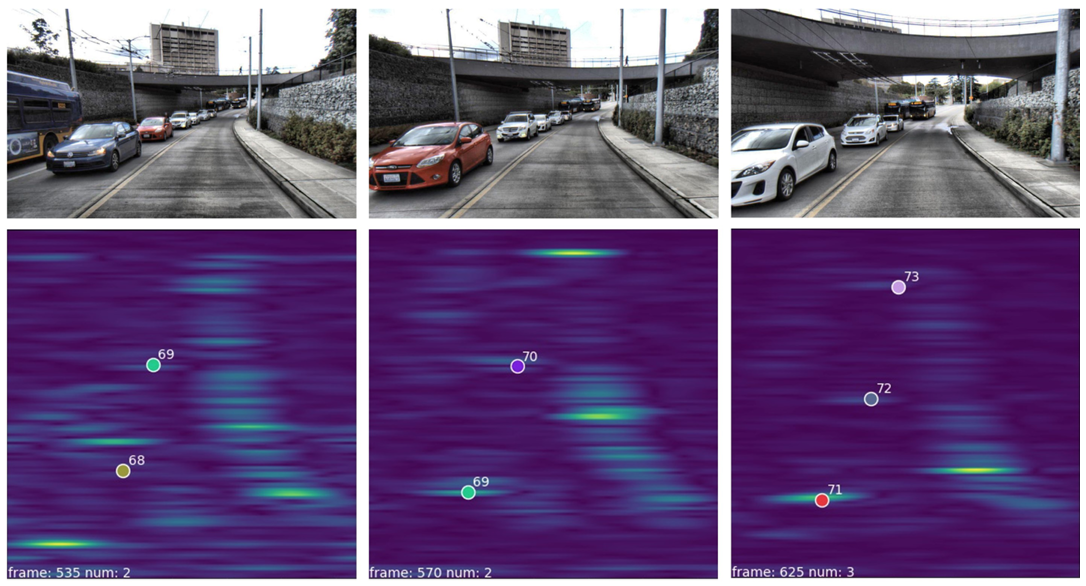

We introduce the CRUW3D dataset, which includes 66K synchronized and well-calibrated camera, radar, and LiDAR frames in various driving scenarios. Unlike other large-scale autonomous driving datasets, our radar data is in the format of radio frequency (RF) tensors that contain not only 3D location information but also spatio-temporal semantic information.

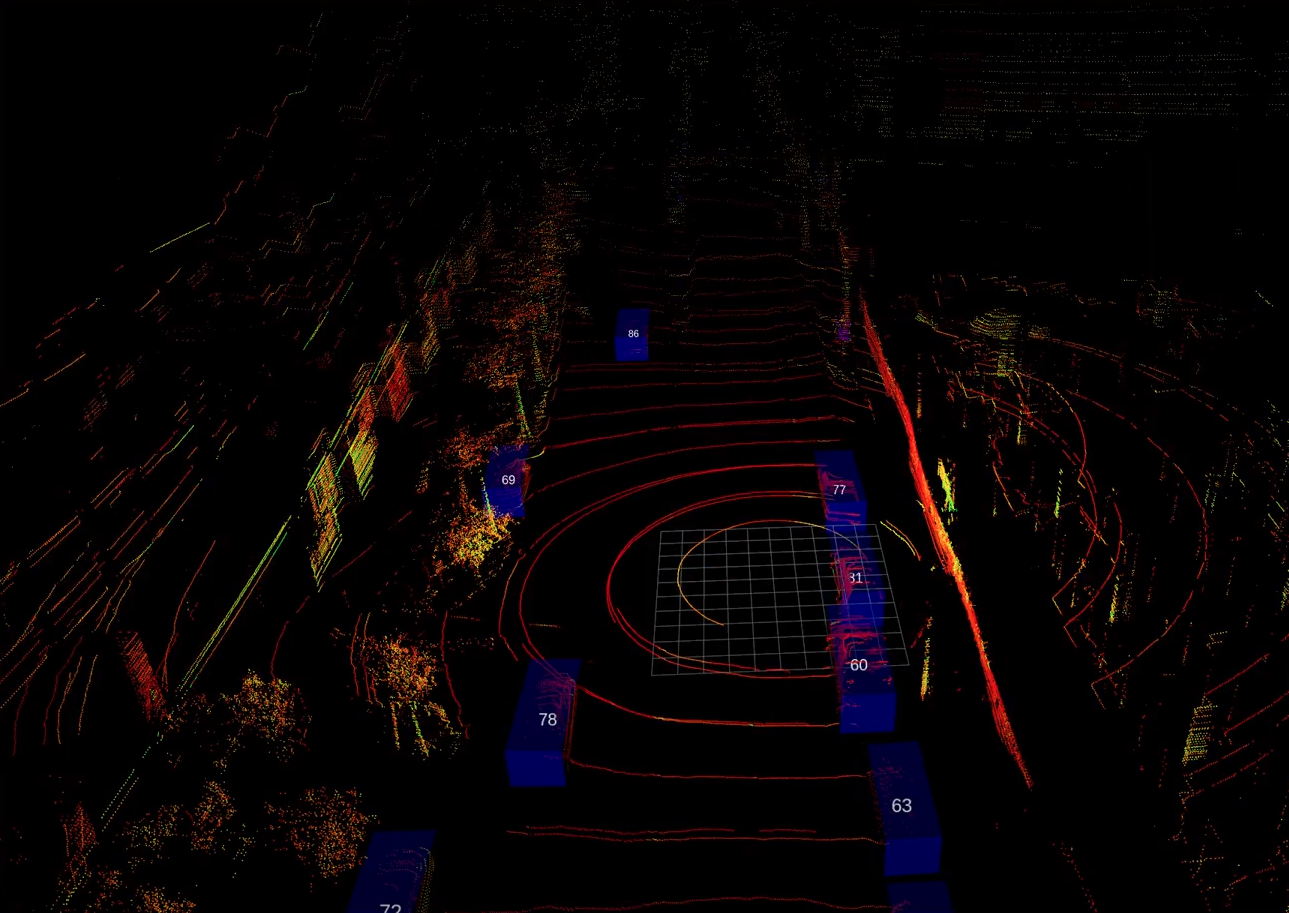

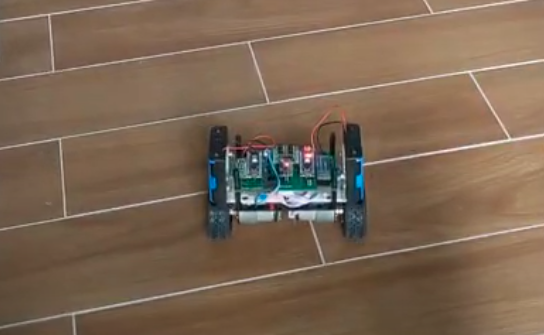

Cross-Modal Contrastive Learning of Representations for Navigation Using Lightweight, Low-Cost Millimeter Wave Radar for Adverse Environmental Conditions

IEEE Robotics and Automation Letters - 2021

Jui-Te Huang, Chen-Lung Lu, Po-Kai Chang, Ching-I Huang, Chao-Chun Hsu, Po-Jui Huang, and Hsueh-Cheng Wang

We propose the use of single-chip millimeter-wave (mmWave) radar, which is lightweight and inexpensive, for learning-based autonomous navigation. Because mmWave radar signals are often noisy and sparse, we introduce a cross-modal contrastive learning for representation (CM-CLR) method that maximizes the agreement between mmWave radar data and LiDAR data in the training stage, enabling autonomous navigation using radar signals.
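To make "maximizing the agreement" between the two modalities concrete, here is a minimal sketch of an InfoNCE-style contrastive loss over paired radar and LiDAR embeddings. It is a generic illustration, not the exact CM-CLR objective. The function names, the `temperature` value, and the choice of cosine similarity are assumptions, and the embeddings are assumed to be nonzero vectors.

```python
import math


def cosine(u, v):
    """Cosine similarity between two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cross_modal_contrastive_loss(radar_emb, lidar_emb, temperature=0.1):
    """InfoNCE-style loss, radar-to-LiDAR direction only.

    radar_emb[i] and lidar_emb[i] are assumed to come from the same scene
    (a positive pair). Every other LiDAR embedding in the batch serves as
    a negative for radar sample i.
    """
    n = len(radar_emb)
    total = 0.0
    for i in range(n):
        logits = [cosine(radar_emb[i], lidar_emb[j]) / temperature
                  for j in range(n)]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Cross-entropy with the matching LiDAR embedding as the target.
        total += log_denominator - logits[i]
    return total / n
```

The loss is small when each radar embedding is most similar to its own LiDAR partner and large when the pairs are mismatched, which is the behavior a contrastive objective of this kind rewards during training.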

A Heterogeneous Unmanned Ground Vehicle and Blimp Robot Team for Search and Rescue using Data-driven Autonomy and Communication-aware Navigation

Journal of Field Robotics - 2022

DARPA SubT Challenge - Urban Circuit 2020

Chen-Lung Lu*, Jui-Te Huang*, Ching-I Huang, Zi-Yan Liu, Chao-Chun Hsu, Yu-Yen Huang, Siao-Cing Huang, Po-Kai Chang, Zu Lin Ewe, Po-Jui Huang, Po-Lin Li, Bo-Hui Wang, Lai-Sum Yim, Sheng-Wei Huang, MingSian R. Bai, and Hsueh-Cheng Wang (*Equal contribution)

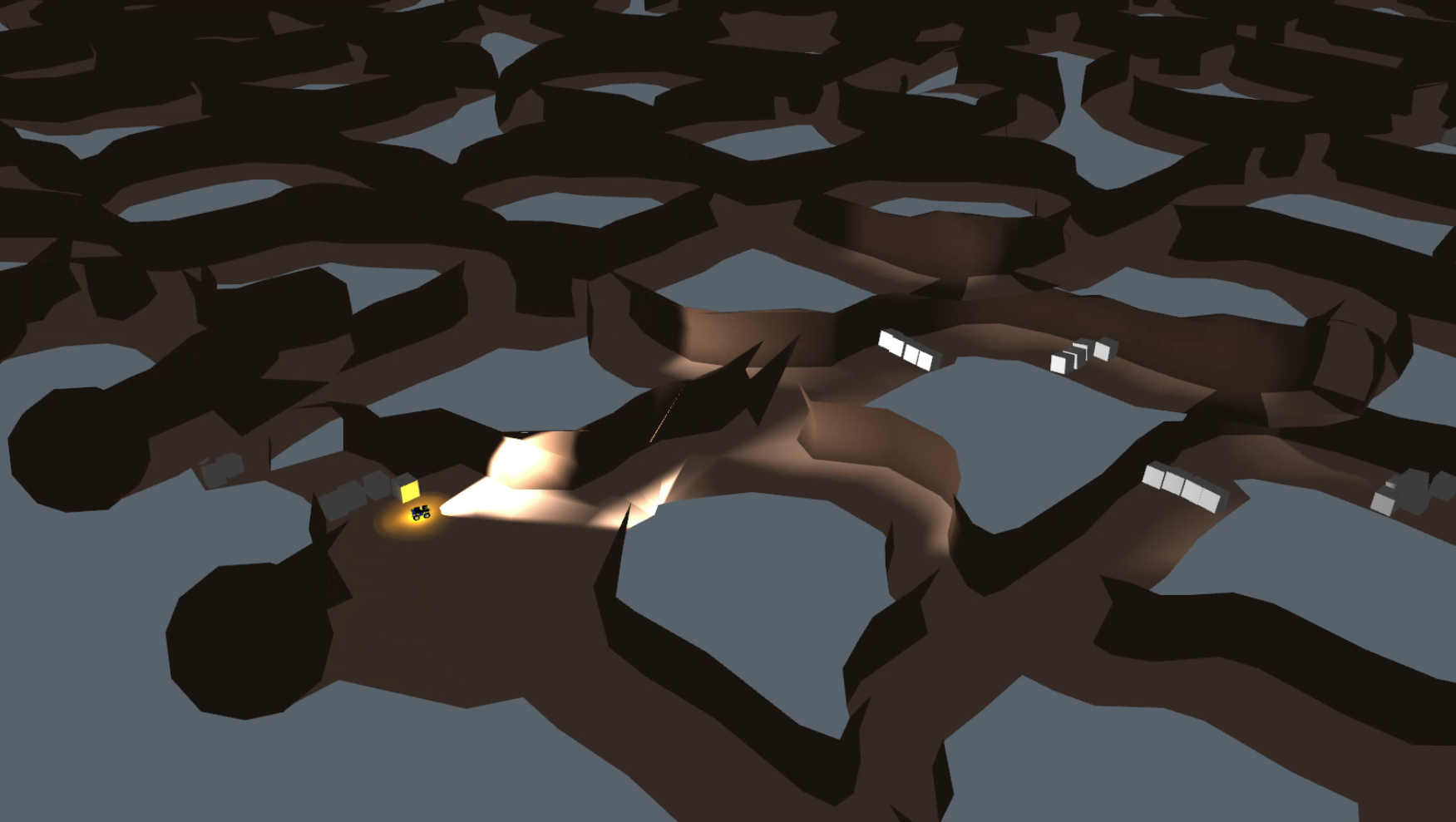

The DARPA Subterranean (SubT) Challenge aims to develop innovative technologies that would augment operations underground. The SubT Challenge explores new approaches to rapidly map, navigate, search, and exploit complex underground environments. I participated in the Urban Circuit as the software team lead and human supervisor. We built our robots to perform search-and-rescue missions in an unfinished nuclear power plant.

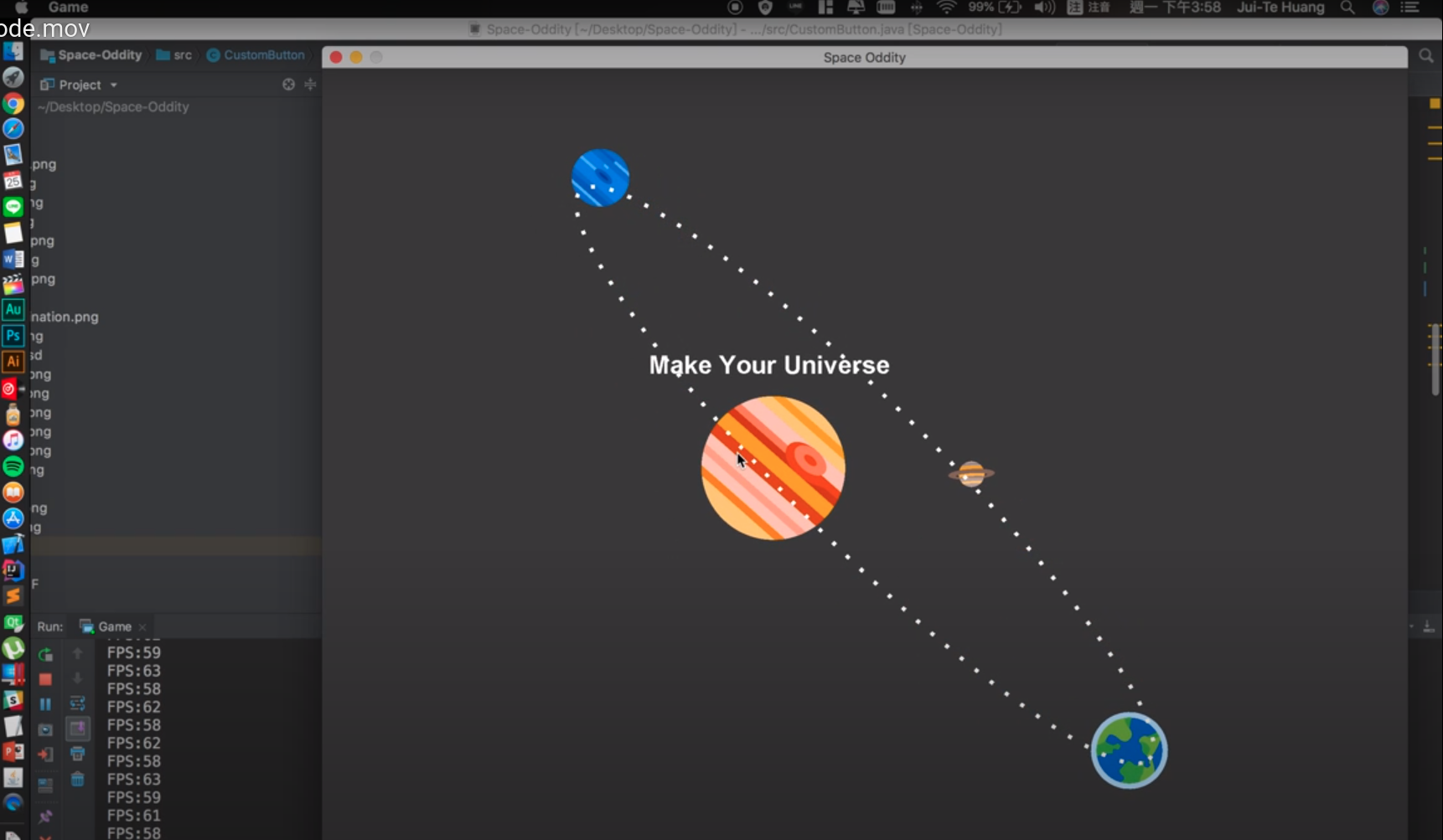

Duckiepond: An Open Education and Research Platform for a Fleet of Autonomous Maritime Vehicles

IEEE/RSJ International Conference on Intelligent Robots and Systems - 2019

Sixth Biennial Meeting of MOOS Development and Application Working Group, Cambridge MA, August, 2019

Ni-Ching Lin, Yu-Chieh Hsiao, Yi-Wei Huang, Ching-Tung Hung, Tzu-Kuan Chuang, Pin-Wei Chen, Jui-Te Huang, Chao-Chun Hsu, Andrea Censi, Michael Benjamin, Chi-Fang Chen, Hsueh-Cheng Wang

This project supports people who are interested in marine robotics. We built a low-cost surface vehicle called duckieboat that is compatible with machine learning frameworks. We demonstrate implementations of classic autonomous navigation algorithms and of vehicle tracking using deep-learning-based computer vision and onboard sensors.

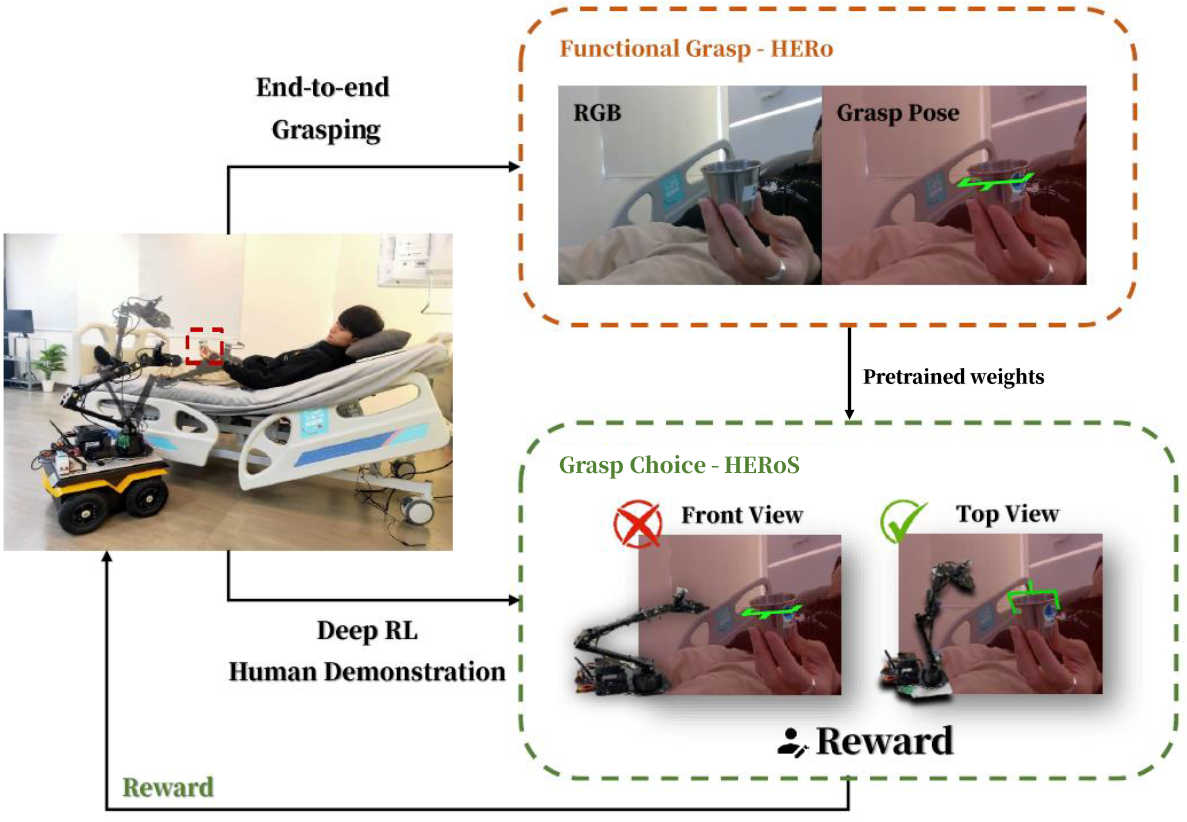

Human-to-Robot Handover via Socially-Aware End-to-End Grasping

2021

We present our socially-aware end-to-end grasping for human-to-robot handover. We first leverage existing end-to-end grasping as the network backbone, and then fine-tune for non-invasive grasps and trajectories using sample-efficient deep reinforcement learning. Comprehensive evaluations are carried out against various recent baselines that use multi-stage hand and object prediction followed by planning.

DARPA SubT Challenge - Tunnel Circuit

Tunnel Circuit - Pittsburgh - 2019

The DARPA Subterranean (SubT) Challenge aims to develop innovative technologies that would augment operations underground. The SubT Challenge explores new approaches to rapidly map, navigate, search, and exploit complex underground environments. I participated in the Tunnel Circuit with my team, where our robots performed search-and-rescue missions in a mine tunnel.

Maritime RobotX Challenge

Hawaii - 2018

We participated in the 2018 Maritime RobotX Challenge and placed 5th in the final stage. We built a surface vehicle to solve multiple tasks, including autonomous docking, navigation, obstacle avoidance, launch and recovery, code scanning, and acoustic pinging.
